In the last article I described how to develop a Kubernetes operator in Python. Now it's time to deploy it into a Kubernetes cluster. I'm using AWS EKS, but the deployment uses only standard Kubernetes resources, so it should work on GKE or any other Kubernetes cluster as well.

step 1 export Python dependencies

We can do this with the command pipenv lock -r > requirements.txt (newer pipenv releases replace this with pipenv requirements > requirements.txt)

step 2 build the Docker image

The Dockerfile is as simple as this:

FROM python:3.7

LABEL maintainer="email2liyang@gmail.com"

COPY kube_service_dns_exporter.py /kube_service_dns_exporter.py

COPY requirements.txt /tmp

# install extra dependencies specified by developers

RUN pip install -r /tmp/requirements.txt

CMD kopf run /kube_service_dns_exporter.py --verbose

Then we can build the Docker image and push it to Docker Hub:

docker build -t email2liyang/kube_service_dns_exporter:latest .

docker push email2liyang/kube_service_dns_exporter:latest

The Docker image is now available on Docker Hub: https://cloud.docker.com/u/email2liyang/repository/docker/email2liyang/kube_service_dns_exporter

step 3 define RBAC for the operator

This is an important step, because the operator needs specific permissions to communicate with the Kubernetes API (Kopf uses pykube-ng for that communication). You can check my RBAC details in rbac.yml. In kubernetes-service-dns-exporter I'm only interested in the creation and deletion events of Kubernetes services, and I've defined a service account named kopf with the permissions needed for that.
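Since rbac.yml itself is not reproduced in this article, here is only a rough sketch of what such a manifest might contain, generated from Python to stay in the operator's own language. The role name kopf-role, the exact verbs, and the use of the tool namespace for the service account are my assumptions for illustration, not a copy of the real file. kubectl accepts JSON as well as YAML, so the printed output could be saved into the yaml/ folder and applied like any other manifest.

```python
import json

NAMESPACE = "tool"        # assumption: the namespace seen in the kubectl output
SERVICE_ACCOUNT = "kopf"  # the service account name used in this article
ROLE_NAME = "kopf-role"   # hypothetical name, chosen for illustration


def build_rbac_manifests():
    """Return a v1 List holding a ServiceAccount, a ClusterRole and its binding."""
    service_account = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": SERVICE_ACCOUNT, "namespace": NAMESPACE},
    }
    cluster_role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRole",
        "metadata": {"name": ROLE_NAME},
        "rules": [
            # Assumption: watch services, and patch them so the operator
            # can store its bookkeeping annotations on the object.
            {"apiGroups": [""], "resources": ["services"],
             "verbs": ["get", "list", "watch", "patch"]},
            # Assumption: allow posting the events shown by `kubectl describe`.
            {"apiGroups": [""], "resources": ["events"], "verbs": ["create"]},
        ],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": ROLE_NAME + "-binding"},
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io",
                    "kind": "ClusterRole", "name": ROLE_NAME},
        "subjects": [{"kind": "ServiceAccount", "name": SERVICE_ACCOUNT,
                      "namespace": NAMESPACE}],
    }
    return {"apiVersion": "v1", "kind": "List",
            "items": [service_account, cluster_role, binding]}


if __name__ == "__main__":
    print(json.dumps(build_rbac_manifests(), indent=2))
```

Depending on the Kopf version, the operator may need further rules (for example for its peering resources), so treat the rule list as a starting point and compare it with the real rbac.yml.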

step 4 define a Kubernetes Secret

It's good practice to put sensitive data into a Kubernetes Secret and only reference it from the Deployment. In my case I'm putting my Route53 zone id and my AWS access key and secret key into the Secret. This is just an example of how the Kubernetes Secret is organised.
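To make the Secret layout concrete, the following sketch builds a Secret manifest in Python and prints it as JSON, which kubectl can apply. The Secret name and the key ROUTE53_ZONE_ID are hypothetical placeholders of mine. AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are the standard environment variable names that the AWS SDKs read, but whether the operator uses exactly these keys is an assumption.

```python
import base64
import json


def build_secret(name, namespace, values):
    """Return a Secret manifest; Kubernetes expects `data` values base64-encoded."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": encoded,
    }


if __name__ == "__main__":
    # Hypothetical name and placeholder values; never commit real credentials.
    secret = build_secret(
        "kube-service-dns-exporter",
        "tool",
        {
            "ROUTE53_ZONE_ID": "<route53-zone-id>",
            "AWS_ACCESS_KEY_ID": "<aws-access-key>",
            "AWS_SECRET_ACCESS_KEY": "<aws-secret-key>",
        },
    )
    print(json.dumps(secret, indent=2))
```

Keep in mind that base64 is an encoding, not encryption, so the generated file should stay out of version control.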

step 5 define the Kubernetes Deployment

The full deployment yaml file can be found on GitHub. In the deployment yaml file I'm using the service account kopf to make sure the pod has enough permission to communicate with the Kubernetes API. It's also recommended to set replicas to 1, because if more than one operator instance handles the same event in the cluster, it may result in unexpected behaviour such as the same event being handled twice.
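As a rough illustration of the points above (not the actual file from GitHub), the sketch below builds a Deployment manifest with a single replica, the kopf service account, and environment variables taken from a Secret. The Secret name, the key names, and the Recreate strategy are my assumptions; the image name comes from step 2 and the deployment name is inferred from the pod name in the kubectl output.

```python
import json

IMAGE = "email2liyang/kube_service_dns_exporter:latest"  # image pushed in step 2
SECRET_NAME = "kube-service-dns-exporter"                # hypothetical Secret name
# Hypothetical key names, exposed to the container as environment variables.
SECRET_KEYS = ["ROUTE53_ZONE_ID", "AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY"]


def build_deployment(name="kube-service-dns-exporter", namespace="tool"):
    """Return a single-replica Deployment that runs under the kopf service account."""
    labels = {"app": name}
    env = [
        {"name": key,
         "valueFrom": {"secretKeyRef": {"name": SECRET_NAME, "key": key}}}
        for key in SECRET_KEYS
    ]
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "replicas": 1,  # only one operator instance should handle the events
            # Assumption: Recreate stops the old pod before starting the new one,
            # so two operators never run side by side during an upgrade.
            "strategy": {"type": "Recreate"},
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "serviceAccountName": "kopf",
                    "containers": [{"name": name, "image": IMAGE, "env": env}],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_deployment(), indent=2))
```

Referencing the Secret through secretKeyRef keeps the credentials out of the Deployment file itself, which is the point of step 4.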

step 6 deploy the Kubernetes artifacts into the cluster

Just run kubectl apply -f yaml/ and we are done. We can check that the pod is running well:

kubectl get po -n tool

NAME READY STATUS RESTARTS AGE

kube-service-dns-exporter-dd8bc57cb-6gf52 1/1 Running 0 1h

and that new events are attached to the existing service:

kubectl describe svc redis -n tool

Name: redis

Namespace: tool

Labels: app=redis

role=master

tier=backend

Annotations: kopf.zalando.org/last-handled-configuration={"spec": {"ports": [{"protocol": "TCP", "port": 6379, "targetPort": 6379, "nodePort": 30702}], "selector": {"app": "redis", "role": "master", "tier": "backe...

kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app":"redis","role":"master","tier":"backend"},"name":"redis","namespace":"...

Selector: app=redis,role=master,tier=backend

Type: NodePort

IP: 172.20.53.35

Port: <unset> 6379/TCP

TargetPort: 6379/TCP

NodePort: <unset> 30702/TCP

Endpoints: 10.31.4.218:6379

Session Affinity: None

External Traffic Policy: Cluster

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Logging 5m kopf creating dns for service redis which point to ip 172.20.53.35

Normal Logging 4m kopf All handlers succeeded for creation.

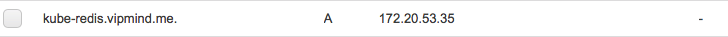

Normal Logging 4m kopf created dns kube-redis.vipmind.me point to 172.20.53.35

Normal Logging 4m kopf Handler 'create_fn' succeeded.

we could also observe that the dns record is created in route53
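For readers wondering what the Route53 side of such an event could look like, here is a minimal sketch that only builds the change request for a service. The operator's real code is not shown in this article, so the function name, the UPSERT action, and the TTL are my assumptions. The kube-<service>.<domain> naming is inferred from the event log above, and the dictionary follows the shape of the ChangeBatch argument of boto3's change_resource_record_sets call.

```python
def build_change_batch(service_name, cluster_ip, domain="vipmind.me", ttl=300):
    """Build a Route53 ChangeBatch that points kube-<service>.<domain> at cluster_ip.

    Hypothetical helper: the naming pattern is inferred from the event log,
    and the TTL and the UPSERT action are illustrative choices.
    """
    record_name = "kube-{}.{}".format(service_name, domain)
    return {
        "Comment": "DNS record for Kubernetes service {}".format(service_name),
        "Changes": [
            {
                "Action": "UPSERT",  # create the record, or update it if it exists
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "A",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": cluster_ip}],
                },
            }
        ],
    }


if __name__ == "__main__":
    batch = build_change_batch("redis", "172.20.53.35")
    print(batch["Changes"][0]["ResourceRecordSet"]["Name"])
```

With boto3 installed, the result could be passed as boto3.client("route53").change_resource_record_sets(HostedZoneId=zone_id, ChangeBatch=batch), where zone_id is the Route53 zone id stored in the Secret.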

Enjoy!