aws-lambda-powertools-python is a useful toolset for developing Lambda functions in Python. It bundles several utilities (logging, tracing, batch processing, parameter retrieval) that make Lambda development easier.

installation

To install aws-lambda-powertools-python (note that the package name on PyPI is aws-lambda-powertools):

pip install aws-lambda-powertools

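I usually list it in requirements-test.txt rather than in the deployed requirements, because at runtime the package can come from a Lambda layer instead of being bundled with the function.

To include the Lambda layer, add it to the function in the SAM template.yaml: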

xxxEventListener:
  Type: AWS::Serverless::Function # More info about Function Resource: https://github.com/awslabs/serverless-application-model/blob/master/versions/2016-10-31.md#awsserverlessfunction
  Properties:
    CodeUri: .
    Role: !Ref IAMRoleARN
    Handler: event_listener.handle
    Layers:
      - !Sub arn:aws:lambda:${AWS::Region}:017000801446:layer:AWSLambdaPowertoolsPython:11

the logger

By default the logger does not include the Python module name in the location field of a log record. If your code spans multiple modules, you can override the location format as below:

from aws_lambda_powertools import Logger

location_format = "%(module)s.%(funcName)s:%(lineno)d"

logger = Logger(location=location_format)

The module name then appears in the location field of every log record.
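The location string uses the standard library's %-style logging attributes, so its effect can be previewed without deploying anything. Here is a minimal sketch using only the stdlib `logging` module; the module name `orders.py`, the path, and the line number are made up for illustration.

```python
import logging

# Same format string as passed to Logger(location=...) above.
location_format = "%(module)s.%(funcName)s:%(lineno)d"

# Build a log record by hand, as if a log call had been made on
# line 42 of handle() in a hypothetical orders.py module.
record = logging.LogRecord(
    name="demo",
    level=logging.INFO,
    pathname="/var/task/orders.py",
    lineno=42,
    msg="order received",
    args=None,
    exc_info=None,
    func="handle",
)

# Render only the location part of the record.
location = logging.Formatter(location_format).format(record)
print(location)  # orders.handle:42
```

Without `%(module)s` the same record would render as `handle:42`, which is ambiguous as soon as two modules both define a `handle` function.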

We can also log the incoming event just by decorating the Lambda handler:

from aws_lambda_powertools.utilities.typing import LambdaContext

@logger.inject_lambda_context(log_event=True)
def handle(event, context: LambdaContext):
    pass

If we set the POWERTOOLS_LOGGER_SAMPLE_RATE environment variable to a value between 0 and 1, that value is the probability that the logger switches to DEBUG level, so a fraction of the traffic is logged in full detail. This is very useful in production.

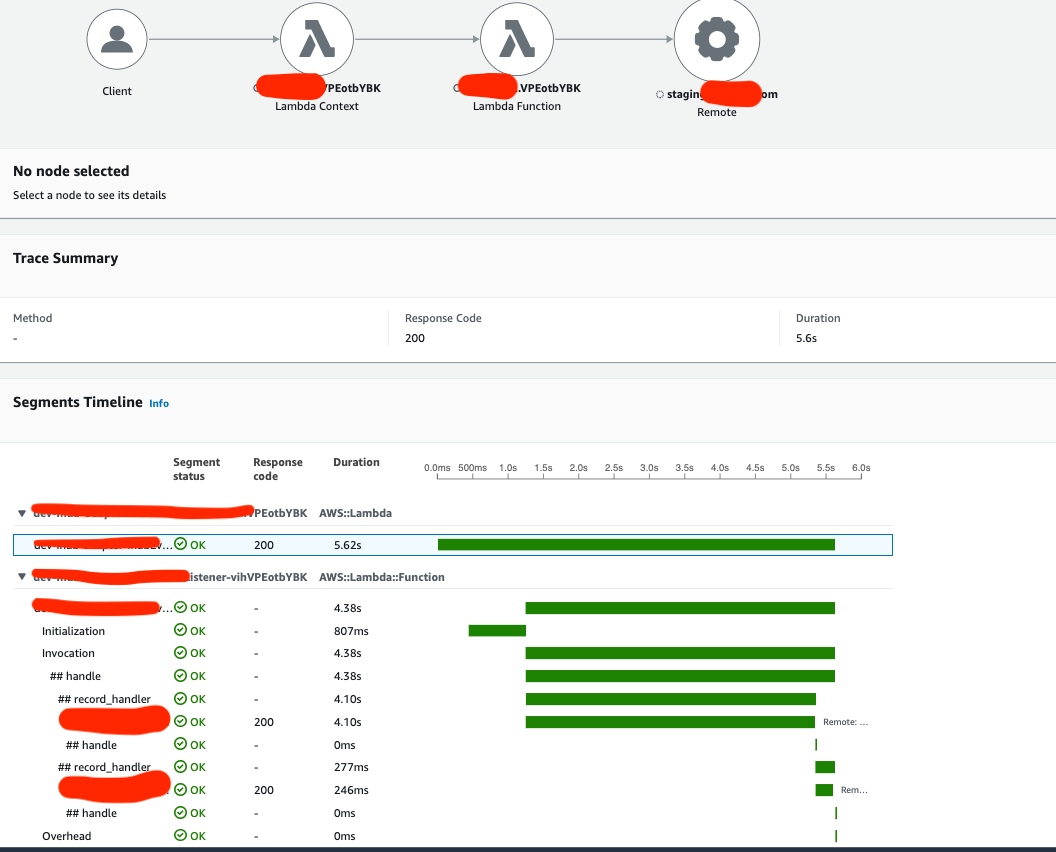
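To get a feel for what a sample rate means in practice, here is a conceptual sketch using only the standard library. It is not Powertools' implementation: `pick_level` is a hypothetical helper, and exactly when the real logger makes its sampling decision is up to the library.

```python
import logging
import random


def pick_level(sample_rate: float, base_level: int = logging.INFO, rng=random.random) -> int:
    """Return DEBUG with probability sample_rate, otherwise base_level."""
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    # rng() yields a float in [0, 1), so 0 never samples and 1 always does.
    return logging.DEBUG if rng() < sample_rate else base_level


# Simulate many sampling decisions at a 10% sample rate.
random.seed(0)
levels = [pick_level(0.1) for _ in range(10_000)]
debug_share = levels.count(logging.DEBUG) / len(levels)
print(f"share of DEBUG decisions: {debug_share:.2%}")
```

A rate of 0.1 therefore keeps log volume close to normal while still surfacing full debug detail for roughly one decision in ten.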

the tracer

We can trace the Lambda handler by adding a decorator to it, as below:

from aws_lambda_powertools import Tracer
from aws_lambda_powertools.utilities.typing import LambdaContext

tracer = Tracer()

@tracer.capture_lambda_handler
def handle(event, context: LambdaContext):
    pass

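We can also decorate any other method that we want to trace:

from aws_lambda_powertools.utilities.data_classes.sqs_event import SQSRecord

@tracer.capture_method
def record_handler(record: SQSRecord):
    pass  # the logic to trace goes here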

We can then get the trace details from AWS X-Ray. When tracing is enabled, the logger includes xray_trace_id in every log record, so it is easy to locate the matching trace in X-Ray by that ID.

the batch

When we use Lambda to process messages from SQS, each invocation receives a batch of messages (e.g. 10 messages in a batch). By default, if any message fails to process, all the messages in the batch are sent back to the SQS queue. With the batch processing utility, only the failed messages are sent back.

When we attach a Lambda function to a queue, make sure to select "ReportBatchItemFailures" in Advanced options.

Jump into the code sample:

from aws_lambda_powertools.utilities.batch import (
    BatchProcessor,
    EventType,
    batch_processor,
)
from aws_lambda_powertools.utilities.data_classes.sqs_event import SQSRecord
from aws_lambda_powertools.utilities.typing import LambdaContext

processor = BatchProcessor(event_type=EventType.SQS)

# defined before handle so the decorator below can reference it
def record_handler(record: SQSRecord):
    # logic to handle 1 sqs record; raise an exception to mark it as failed
    pass

@batch_processor(record_handler=record_handler, processor=processor)
def handle(event, context: LambdaContext):
    return processor.response()
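To make the partial-failure contract concrete, here is a plain-Python sketch of the idea, without Powertools. `process_batch` and `demo_handler` are hypothetical names for illustration; the response shape (`batchItemFailures` with one `itemIdentifier` per failed `messageId`) is what Lambda expects when ReportBatchItemFailures is enabled.

```python
from typing import Callable, Dict, List


def process_batch(
    event: dict, record_handler: Callable[[dict], None]
) -> Dict[str, List[Dict[str, str]]]:
    """Run record_handler on each SQS record and report only the failures."""
    failures = []
    for record in event.get("Records", []):
        try:
            record_handler(record)
        except Exception:
            # Only this message is reported back, so only it is retried.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


def demo_handler(record: dict) -> None:
    # Hypothetical rule: a body of "bad" cannot be processed.
    if record["body"] == "bad":
        raise ValueError("cannot process record")


event = {
    "Records": [
        {"messageId": "m-1", "body": "ok"},
        {"messageId": "m-2", "body": "bad"},
        {"messageId": "m-3", "body": "ok"},
    ]
}
response = process_batch(event, demo_handler)
print(response)  # {'batchItemFailures': [{'itemIdentifier': 'm-2'}]}
```

Messages m-1 and m-3 are treated as done, and only m-2 returns to the queue for another attempt.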

the parameter store

One way to manage Lambda parameters is AWS SSM Parameter Store, where we can store parameters in a tree-like structure, e.g.:

/app/env/system/key1=val1

/app/env/system/key2=val2

There are 2 ways to access these values from Lambda:

option 1

Declare the key as a SAM template parameter:

Parameters:
  Key1:
    Description: the key 1 desc
    Type: AWS::SSM::Parameter::Value<String>
    Default: /app/env/system/key1

When we deploy the SAM application, the value of parameter Key1 is resolved to val1.

option 2

from aws_lambda_powertools.utilities import parameters

value = parameters.get_parameter("/app/env/system/key1")

value will be "val1"

We can also retrieve a collection of items:

items = parameters.get_parameters("/app/env/system")

for k, v in items.items():
    logger.debug(f"{k} -> {v}")
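To show what the returned collection looks like, here is a small sketch that needs no AWS access. `FAKE_STORE` and `get_by_path` are hypothetical stand-ins, and the sketch assumes that keys come back relative to the requested path, which is my understanding of how `get_parameters` behaves.

```python
from typing import Dict

# Hypothetical in-memory stand-in for the parameter tree shown earlier.
FAKE_STORE = {
    "/app/env/system/key1": "val1",
    "/app/env/system/key2": "val2",
    "/app/env/other/key3": "val3",
}


def get_by_path(path: str, store: Dict[str, str]) -> Dict[str, str]:
    """Return the parameters under path, keyed by name relative to path."""
    prefix = path.rstrip("/") + "/"
    return {
        name[len(prefix):]: value
        for name, value in store.items()
        if name.startswith(prefix)
    }


items = get_by_path("/app/env/system", FAKE_STORE)
for k, v in items.items():
    print(f"{k} -> {v}")
```

Grouping related settings under one path prefix means a single lookup fetches everything a function needs.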