Today I got a bunch of messages from the other side of the world. My co-workers are all located there: I work while they sleep and they work while I sleep, so we only have a short overlap in which to discuss issues. Today's issue was an interesting one. We are using the approach I described in Managing EKS Worker nodes dynamically by AWS Auto Scaling Groups to manage the EKS worker nodes, but with finer control: we want to terminate worker nodes in the ASG by selecting them with a label. There was nothing wrong with the selection logic, but we saw strange behaviour when terminating nodes. When we terminated the nodes carrying the selected label, those nodes were terminated as expected, but some other EKS worker nodes were unexpectedly terminated as well.
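To make the setup concrete, here is a minimal sketch of "terminate worker nodes by label". It is not our actual tooling. It assumes the node objects are plain dicts shaped like the items from `kubectl get nodes -o json`, and that `asg_client` is a boto3 Auto Scaling client. The helper names (`select_instance_ids`, `terminate_selected`) are hypothetical. The EC2 instance ID is taken from each node's `spec.providerID`, which on EKS looks like `aws:///<az>/<instance-id>`.

```python
"""Sketch: terminate EKS worker nodes selected by a Kubernetes label.

Assumptions (hypothetical helpers, not the original tooling): nodes are dicts
shaped like `kubectl get nodes -o json` items; `asg_client` is a boto3
Auto Scaling client, e.g. boto3.client("autoscaling").
"""
from typing import Dict, List


def instance_id_from_provider_id(provider_id: str) -> str:
    # EKS nodes report spec.providerID as "aws:///<az>/<instance-id>".
    return provider_id.rsplit("/", 1)[-1]


def select_instance_ids(nodes: List[dict], selector: Dict[str, str]) -> List[str]:
    """Return EC2 instance IDs of nodes whose labels match every selector pair."""
    ids = []
    for node in nodes:
        labels = node.get("metadata", {}).get("labels", {})
        if all(labels.get(key) == value for key, value in selector.items()):
            ids.append(instance_id_from_provider_id(node["spec"]["providerID"]))
    return ids


def terminate_selected(asg_client, nodes: List[dict], selector: Dict[str, str]) -> List[str]:
    """Terminate the matching instances through the ASG and return their IDs."""
    ids = select_instance_ids(nodes, selector)
    for instance_id in ids:
        asg_client.terminate_instance_in_auto_scaling_group(
            InstanceId=instance_id,
            # Shrink desired capacity so the ASG does not launch a replacement.
            ShouldDecrementDesiredCapacity=True,
        )
    return ids
```

Decrementing the desired capacity is what makes this a "remove these nodes" operation rather than a "replace these nodes" one. As the rest of this post shows, it does not stop the ASG from moving *other* instances around afterwards.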

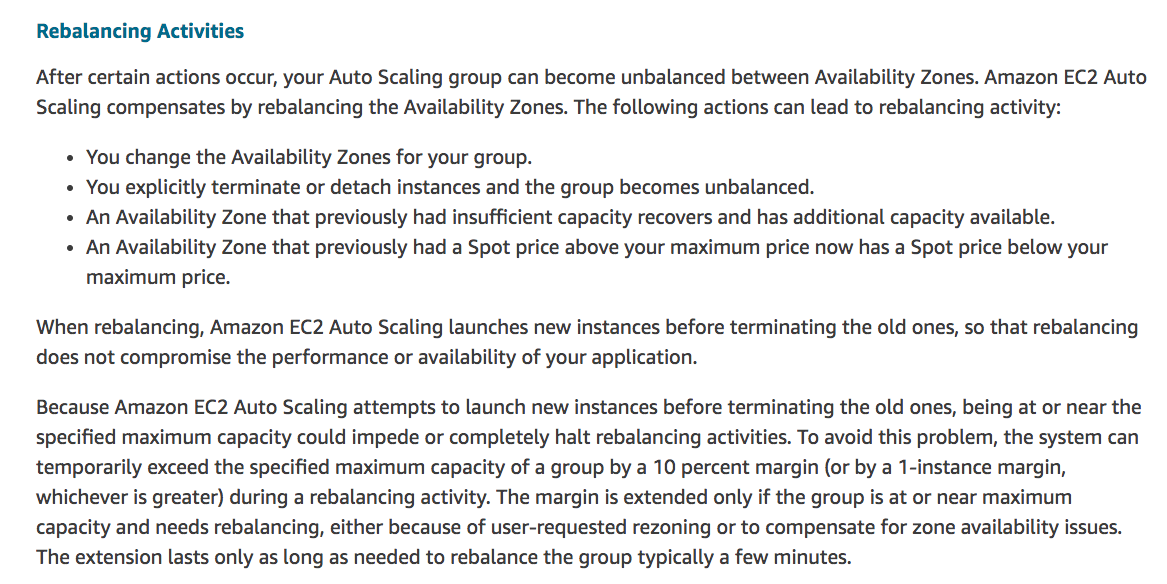

When I was called in to check this issue, I suddenly remembered that ASGs have a feature named AZRebalance among their scaling processes. In short, if an ASG spans multiple AZs (Availability Zones), by default it tries to rebalance the EC2 instances in the group so that they are evenly distributed across those AZs. Below is the relevant official documentation from AWS.

This is exactly what happened to us. When we terminated EC2 worker nodes in the ASG, the ASG detected that it was no longer balanced across AZs, so it launched new instances and terminated other instances to bring the group back into AZ balance.
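To see why terminating a few labelled nodes can cost you other nodes too, here is a toy model of rebalancing. It is a simplification, not AWS's actual algorithm. It assumes "balanced" means AZ sizes differ by at most one, and that the remainder stays in the AZs that already have the most instances. The function name `rebalance_plan` is hypothetical. It returns, per AZ, how many instances would be launched (positive) or terminated (negative).

```python
from typing import Dict


def rebalance_plan(counts: Dict[str, int]) -> Dict[str, int]:
    """Per-AZ change (+launch / -terminate) needed to balance the group.

    Simplified model (an assumption, not AWS's exact algorithm): the group is
    balanced when AZ sizes differ by at most one, and AZs that already hold
    the most instances keep the remainder so as few instances as possible move.
    """
    total = sum(counts.values())
    base, extra = divmod(total, len(counts))
    # Largest AZs first (ties broken by name) so they keep the extra instances.
    order = sorted(counts, key=lambda az: (-counts[az], az))
    target = {az: base + (1 if i < extra else 0) for i, az in enumerate(order)}
    return {az: target[az] - counts[az] for az in counts}
```

For example, start with 2 nodes in each of three AZs and terminate both labelled nodes in `us-east-1a` while decrementing desired capacity. The group is left at 0/2/2, and the model says one instance is launched in `us-east-1a` and one is terminated in `us-east-1c`. That matches the symptom above: a node we never selected disappears. AWS documents that rebalancing launches the new instances before terminating the old ones, so capacity does not dip, but the unselected node is still replaced.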

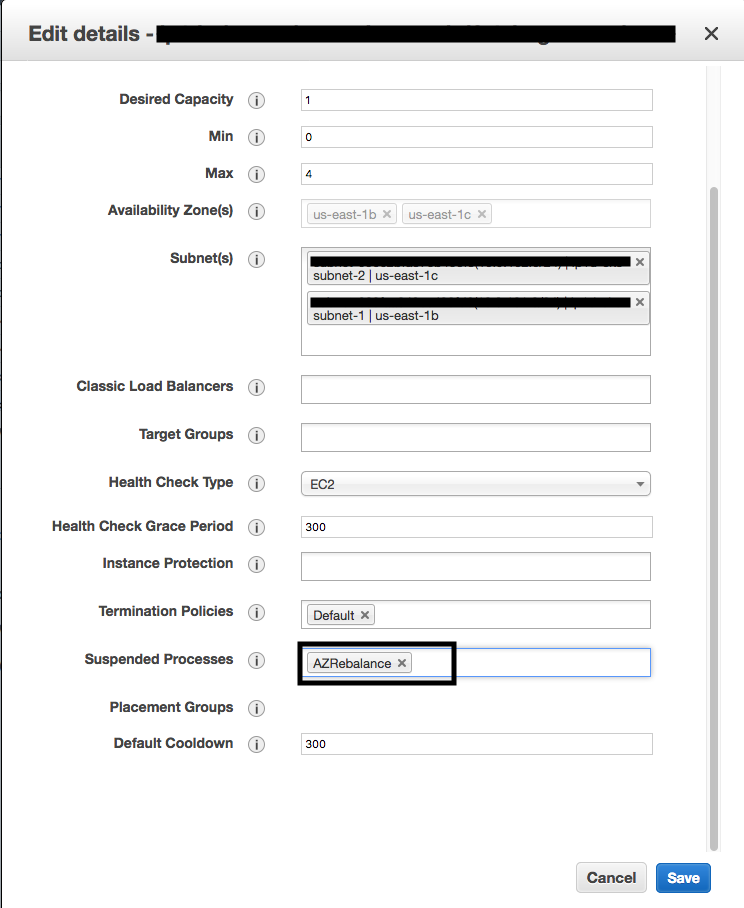

Fixing this issue is easy: we can suspend the AZRebalance process for the selected ASG.
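As a sketch of that fix, the function below suspends only `AZRebalance` on one group and then reads the group back to confirm the process shows up in `SuspendedProcesses`. It assumes `asg_client` is a boto3 Auto Scaling client (`boto3.client("autoscaling")`); the function name is hypothetical. Other processes such as `Launch` and `Terminate` keep running, so explicit terminations and normal scaling still work.

```python
"""Sketch: suspend AZRebalance on one ASG and confirm it took effect.

Assumes `asg_client` is a boto3 Auto Scaling client, e.g.
boto3.client("autoscaling"); `suspend_az_rebalance` is a hypothetical helper.
"""


def suspend_az_rebalance(asg_client, asg_name: str) -> bool:
    # Suspend only AZRebalance; Launch, Terminate, etc. are left untouched.
    asg_client.suspend_processes(
        AutoScalingGroupName=asg_name,
        ScalingProcesses=["AZRebalance"],
    )
    # Read the group back and check its SuspendedProcesses list.
    resp = asg_client.describe_auto_scaling_groups(AutoScalingGroupNames=[asg_name])
    groups = resp["AutoScalingGroups"]
    if not groups:
        raise ValueError(f"Auto Scaling group {asg_name!r} not found")
    suspended = {p["ProcessName"] for p in groups[0].get("SuspendedProcesses", [])}
    return "AZRebalance" in suspended
```

The same change from the command line is `aws autoscaling suspend-processes --auto-scaling-group-name <your-asg> --scaling-processes AZRebalance`. Running `resume-processes` with the same arguments turns rebalancing back on if you need it later.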